Writing of an Article and Calibration Evaluations

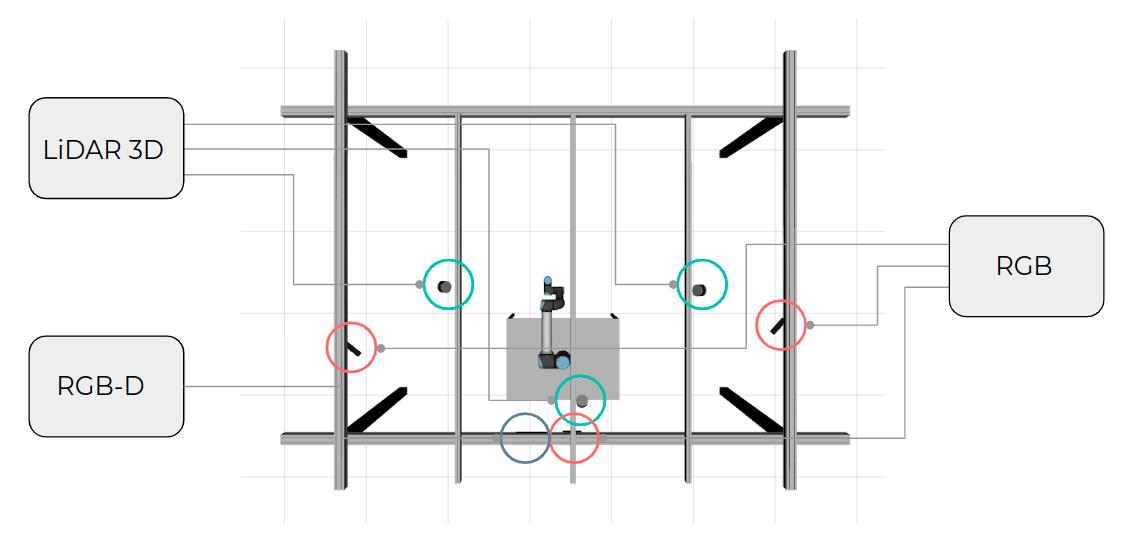

LarCCell is a collaborative cell that serves as a case study for this project. The ultimate goal is for it to become a three-dimensional space in which robots and humans can safely cooperate on shared tasks. Achieving this requires many sensors to monitor the space, with enough redundancy to compensate for occlusions. The image above shows the current setup of LarCCell.

This setup was used as the basis for an article to be submitted to IEEE Transactions on Industrial Informatics.

The article explains the fundamentals of our sensor-to-pattern calibration approach and details the calibration results for every pair of sensors in the current setup. For the RGB-RGB pairs, we also calibrated our dataset with the OpenCV calibration toolbox and with the Kalibr camera calibration toolbox to compare results. Although Kalibr produced better results for some camera pairs, it could not calibrate all of them: Kalibr requires the cameras to see the calibration pattern at the same time, and for some pairs the overlap between the cameras' fields of view was minimal.
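To illustrate why a pairwise tool needs both cameras to see the pattern simultaneously, the sketch below (our own illustration, not code from the article, OpenCV, or Kalibr) recovers the transform between two sensors from each sensor's pose relative to the pattern in a single collection. It assumes 4x4 homogeneous transforms where `T_a_p` maps points from the pattern frame into sensor a's frame; all function names are hypothetical.

```python
import numpy as np


def make_transform(rotation_z_deg: float, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform: rotation about z plus a translation."""
    theta = np.deg2rad(rotation_z_deg)
    c, s = np.cos(theta), np.sin(theta)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def invert_transform(T: np.ndarray) -> np.ndarray:
    """Invert a rigid transform using R^T and -R^T t (cheaper than a general inverse)."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def relative_from_pattern(T_a_p: np.ndarray, T_b_p: np.ndarray) -> np.ndarray:
    """Pose of sensor b in sensor a's frame: T_a_b = T_a_p @ inv(T_b_p).

    Both inputs must refer to the SAME pattern pose, i.e. both sensors
    detected the pattern in the same collection.
    """
    return T_a_p @ invert_transform(T_b_p)
```

If only one of the two sensors detects the pattern in a given collection, `T_b_p` (or `T_a_p`) is missing and this pairwise relation cannot be formed for that collection, which is the limitation described above.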

For the LiDAR-LiDAR and depth-LiDAR pairs, we also calibrated our dataset with the ICP algorithm, which yielded lower accuracy than our method for every pair, further supporting the validity of our calibration.
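For reference, a textbook point-to-point ICP alternates nearest-neighbour matching with a closed-form rigid alignment computed by SVD (the Kabsch method). The sketch below is a minimal illustration of that classic formulation only; it is not necessarily the ICP variant or implementation used in our evaluation, and its brute-force matching only suits small point clouds.

```python
import numpy as np


def best_fit_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares rigid transform (R, t) mapping src onto dst (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # avoid returning a reflection
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(source: np.ndarray, target: np.ndarray, max_iterations=50, tolerance=1e-10):
    """Point-to-point ICP aligning source (Nx3) to target (Mx3).

    Returns the accumulated rotation, translation, and the RMS
    nearest-neighbour distance measured at the start of the last iteration.
    """
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    prev_error = error = np.inf
    for _ in range(max_iterations):
        # Brute-force nearest neighbour in target for every current point.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        error = np.sqrt(d2[np.arange(len(current)), idx].mean())
        # Align the current points to their matches and accumulate the pose.
        R, t = best_fit_transform(current, target[idx])
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
        if abs(prev_error - error) < tolerance:
            break
        prev_error = error
    return R_total, t_total, error
```

ICP only converges to the correct alignment when the initial guess is close enough for most nearest-neighbour matches to be correct, which is one reason it struggles between sensors with little overlap.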

We concluded that our calibration framework can calibrate sensors with minimal overlap and with partial detections of the calibration pattern. Moreover, because the framework uses a sensor-to-pattern rather than a sensor-to-sensor approach, it calibrated all seven sensors simultaneously. A pairwise approach would have required calibrating 21 sensor pairs (7 × 6 / 2) instead of the 7 sensors that our method estimates.
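The counting argument can be sketched as follows, under the assumption that the sensor-to-pattern calibration yields one pose per sensor in a common frame (`T_world_sensor`, a hypothetical name): every pairwise transform then follows from those poses instead of being calibrated separately. The sensor names in the usage test are placeholders, not the actual LarCCell sensors.

```python
import itertools
import math

import numpy as np


def pairwise_transforms(world_poses: dict) -> dict:
    """Derive every sensor-to-sensor transform from per-sensor poses.

    world_poses[name] is a 4x4 T_world_sensor mapping points from the sensor
    frame into the common frame; the result maps (a, b) to
    T_a_b = inv(T_world_a) @ T_world_b.
    """
    pairs = {}
    for a, b in itertools.combinations(sorted(world_poses), 2):
        pairs[(a, b)] = np.linalg.inv(world_poses[a]) @ world_poses[b]
    return pairs


def number_of_pairs(n_sensors: int) -> int:
    """Pairs a sensor-to-sensor approach would need: n choose 2."""
    return math.comb(n_sensors, 2)
```

With 7 estimated poses, `pairwise_transforms` returns 21 transforms, and they are mutually consistent by construction (for example, `T_a_c` equals `T_a_b @ T_b_c`), which independent pairwise calibrations do not guarantee.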