Writing the manuscript "New Methodology to Calibrate Depth Sensors in Multi-Modal Dynamic Setups"

This manuscript describes the addition of the depth modality to the ATOM framework. The methodology is tested on two systems: a single RGB-D camera and a complex dynamic system with several sensors. For more detailed information about the experiments and methodology, please read the manuscript.

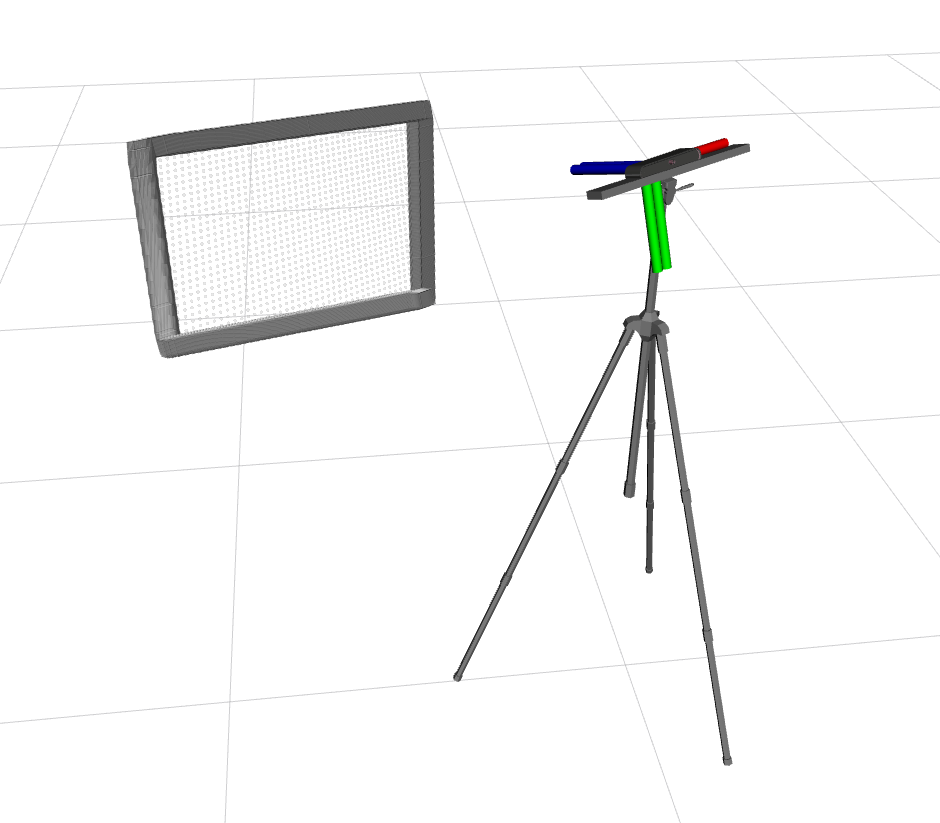

The first experiment described in the manuscript involves a simple RGB-D system, as shown in the image.

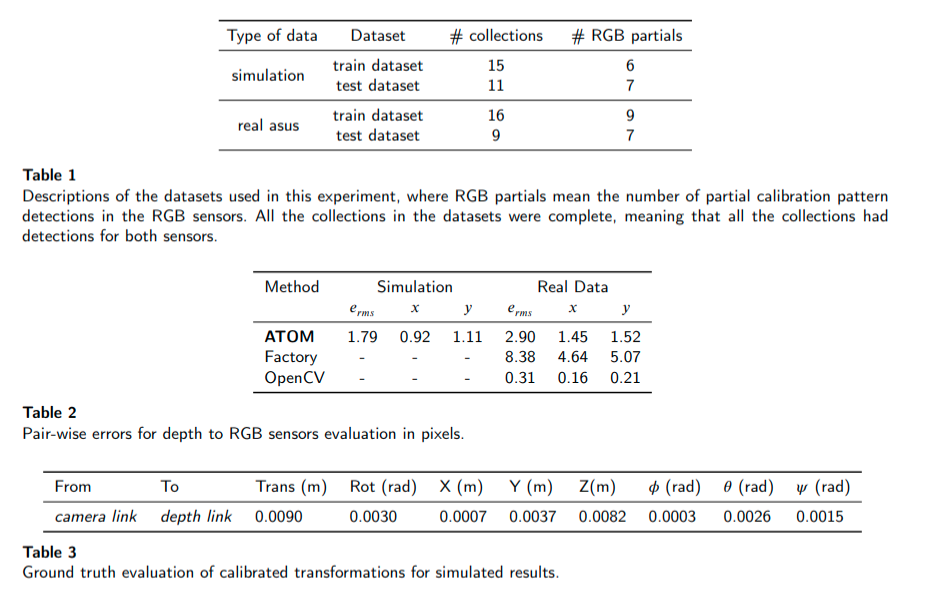

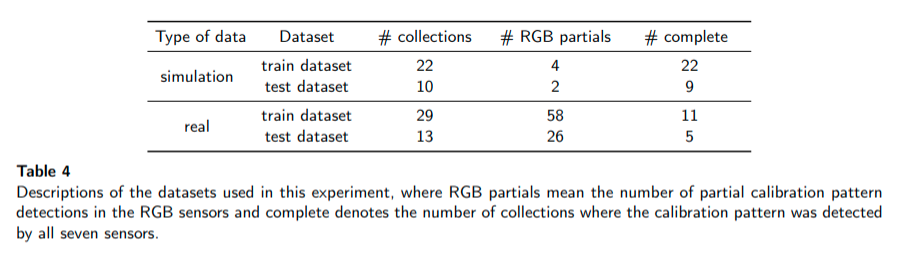

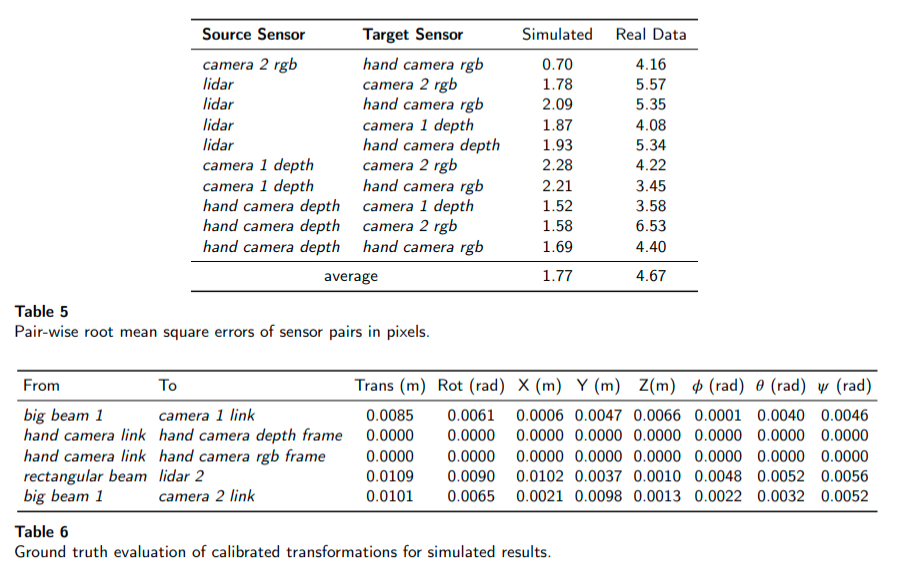
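Calibrating an RGB-D camera amounts to estimating the rigid transform between its depth and RGB frames. To show what that transform is used for, here is a minimal sketch that maps a depth pixel into RGB image coordinates. It assumes an ideal pinhole model with no lens distortion, and the function names and `T_rgb_depth` are hypothetical illustrations, not ATOM's API.

```python
import numpy as np


def backproject(u, v, depth, K):
    """Back-project pixel (u, v) with metric depth into a 3D point in the
    depth camera frame, using an ideal pinhole model (no distortion)."""
    fx, fy = K[0, 0], K[1, 1]
    cx, cy = K[0, 2], K[1, 2]
    return np.array([(u - cx) * depth / fx, (v - cy) * depth / fy, depth])


def project(point, K):
    """Project a 3D point expressed in a camera frame onto that camera's image."""
    uvw = K @ point
    return uvw[:2] / uvw[2]


def depth_pixel_to_rgb_pixel(u, v, depth, K_depth, K_rgb, T_rgb_depth):
    """Map a depth pixel to RGB image coordinates.

    T_rgb_depth is a hypothetical 4x4 homogeneous transform that maps points
    from the depth camera frame into the RGB camera frame; it is the kind of
    extrinsic that the calibration estimates.
    """
    p_depth = np.append(backproject(u, v, depth, K_depth), 1.0)
    p_rgb = (T_rgb_depth @ p_depth)[:3]
    return project(p_rgb, K_rgb)


if __name__ == "__main__":
    # Illustrative values only: shared intrinsics and a 2.5 cm baseline along x.
    K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
    T = np.eye(4)
    T[0, 3] = 0.025
    print(depth_pixel_to_rgb_pixel(320, 240, 1.0, K, K, T))  # approximately (332.5, 240.0)
```

An error in `T_rgb_depth` shifts every mapped pixel, which is why a poor depth-to-RGB extrinsic shows up directly as misaligned color and depth.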

This system was calibrated in both real and simulated environments, and the results were compared with the factory calibration and with other calibration algorithms. The following tables describe the experiments conducted and the results obtained so far.

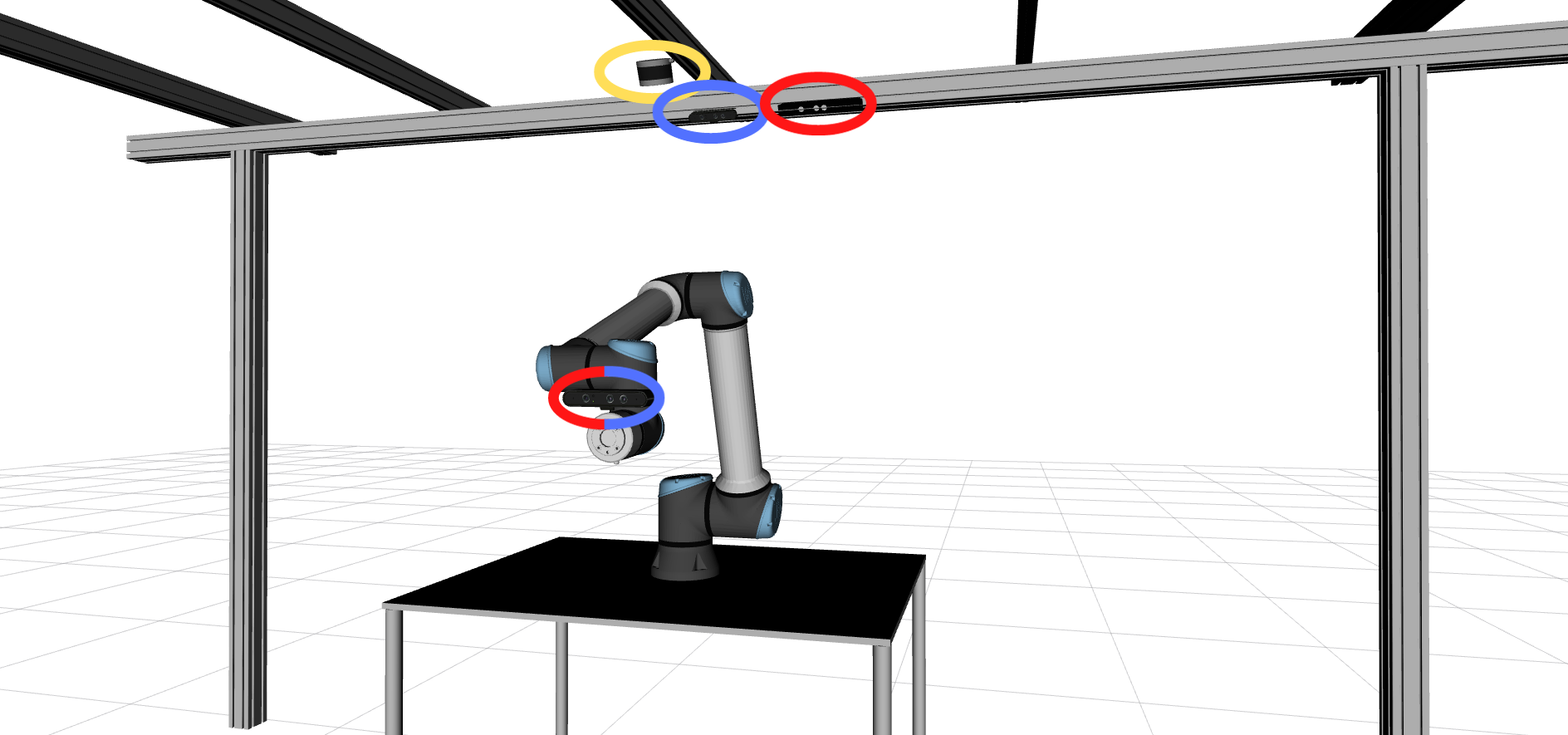
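The exact evaluation metrics are given in the manuscript. As an assumption, a common way to compare calibrations such as the factory one and an optimized one on the same data is the root-mean-square (RMS) reprojection error: project the calibration pattern corners with each candidate calibration and measure the pixel distance to the detected corners. The function names and method labels in this sketch are hypothetical, and the numbers are toy values, not results from the tables.

```python
import numpy as np


def rms_reprojection_error(projected, detected):
    """Root-mean-square Euclidean distance, in pixels, between pattern
    corners projected with a given calibration and the detected corners."""
    projected = np.asarray(projected, dtype=float)
    detected = np.asarray(detected, dtype=float)
    if projected.shape != detected.shape:
        raise ValueError("projected and detected points must have the same shape")
    distances = np.linalg.norm(projected - detected, axis=1)
    return float(np.sqrt(np.mean(distances ** 2)))


def compare_calibrations(projections_by_method, detected):
    """Score each candidate calibration (e.g. factory vs. optimized) on the
    same detections; lower is better."""
    return {name: rms_reprojection_error(points, detected)
            for name, points in projections_by_method.items()}


if __name__ == "__main__":
    # Toy numbers for illustration only, not results from the manuscript.
    detected = [[0.0, 0.0], [10.0, 0.0]]
    scores = compare_calibrations(
        {"factory": [[3.0, 4.0], [10.0, 0.0]],
         "optimized": [[0.0, 1.0], [10.0, 1.0]]},
        detected,
    )
    print(scores)
```

Scoring every method against the same detections keeps the comparison fair; comparing errors computed on different collections would mix calibration quality with dataset difficulty.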

The second experiment involves a complex system with three static sensors (RGB, LiDAR and depth camera) and two dynamic sensors mounted on the robotic arm (RGB and depth). This experiment therefore demonstrates that the methodology can calibrate a combination of dynamic and static sensors. The following image shows a simulated representation of the system.

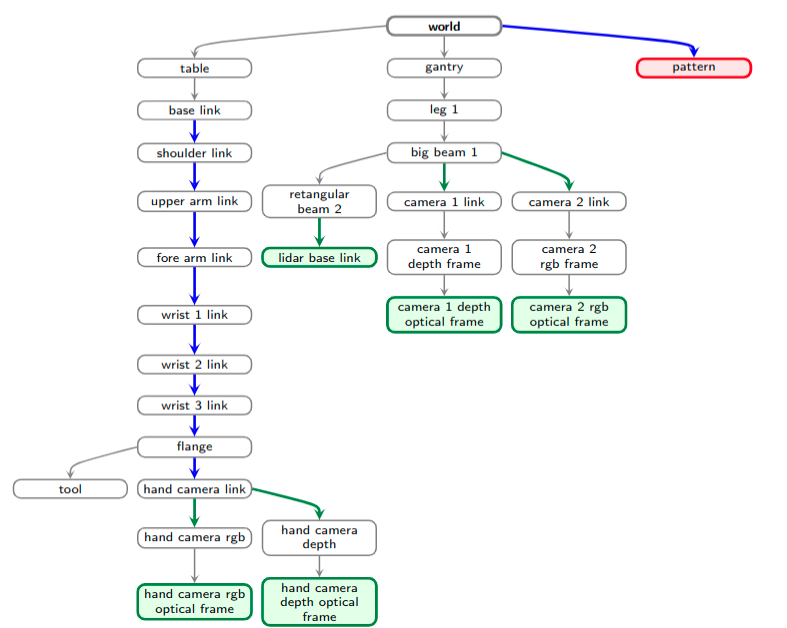

The following image shows the transformation tree of the system. Blue arrows represent dynamic transformations, and green arrows represent the transformations estimated during the optimization. The red node represents the calibration pattern, and the green nodes represent the sensor links that output data. The tree illustrates how complex the system is.
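To make the arrow colors concrete, here is a minimal sketch of such a tree: each edge is static, dynamic (blue, updated per collection from the arm's state) or calibrated (green, a free parameter of the optimization), and the transform between any two frames is obtained by chaining edges through the root. The frame names and helper functions are hypothetical, the transforms are pure translations for brevity, and this is not ATOM's internal data structure.

```python
import numpy as np


def translation(x, y, z):
    """4x4 homogeneous transform with identity rotation (kept simple on purpose)."""
    T = np.eye(4)
    T[:3, 3] = [x, y, z]
    return T


# Hypothetical tree: child frame -> (parent frame, edge kind, parent->child transform).
#   "static":     fixed and not optimized
#   "dynamic":    changes every collection (blue arrows, e.g. robot arm joints)
#   "calibrated": estimated by the optimization (green arrows)
tree = {
    "arm_base": ("world", "static", translation(0.0, 0.0, 0.5)),
    "arm_tool": ("arm_base", "dynamic", translation(0.3, 0.0, 0.2)),
    "hand_camera": ("arm_tool", "calibrated", translation(0.0, 0.05, 0.0)),
    "fixed_lidar": ("world", "calibrated", translation(1.0, 0.0, 1.2)),
}


def root_to_frame(tree, frame):
    """Chain the parent->child transforms from the root down to `frame`."""
    T = np.eye(4)
    while frame in tree:
        parent, _, T_parent_child = tree[frame]
        T = T_parent_child @ T
        frame = parent
    return T


def transform_between(tree, source, target):
    """Pose of `target` expressed in the `source` frame."""
    return np.linalg.inv(root_to_frame(tree, source)) @ root_to_frame(tree, target)


def set_dynamic(tree, frame, T_parent_child):
    """Update a dynamic edge with the value recorded for a given collection."""
    parent, kind, _ = tree[frame]
    if kind != "dynamic":
        raise ValueError(f"{frame} is not a dynamic transform")
    tree[frame] = (parent, kind, T_parent_child)


def calibrated_frames(tree):
    """Frames whose parent->child transform is a free parameter of the optimization."""
    return [child for child, (_, kind, _) in tree.items() if kind == "calibrated"]
```

Because the arm moves between collections, the `hand_camera` to `fixed_lidar` transform differs from one collection to the next, even though both calibrated edges are shared by all collections. This is what allows static and arm-mounted sensors to be calibrated jointly.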

This system was likewise calibrated in both real and simulated environments, and the results were compared with the factory calibration and with other calibration algorithms. The following tables describe the experiments conducted and the results obtained so far.

Issues of the month

- Is the RGB calibration really correct? - open

- Generating json file for factory calibration of RGB-D cameras - closed

- Fixing RGB-to-RGB evaluation - open

- Evaluation metrics vary a lot from dataset to dataset - closed

- Calibrate not deleting collections that do not have at least one RGB detection - closed

- Problem with LiDAR - Depth evaluation - closed

- Error when calibrating larcc subset - closed

- Error running dataset_playback - closed

- Depth labeling not excluding image edges (both automatic and manual) - closed

- RGB labelling crashing after PR merging - closed

- Click on image to change the seed point for depth labeling doesn't always work - closed

- configure_calibration_package not able to resolve topic names with \ - open

- Lidar to depth evaluation also crashes when there is an incomplete collection - closed

- Why is the evaluation table style of lidar_to_depth_evaluation different from the evaluation tables of every other sensor pair? - closed

- Depth to RGB evaluation failing when there are incomplete collections - closed

- Suggestion: Add inspect_dataset to documentation - closed

- Problems with documentation - open

- Depth label should be able to ignore outliers - open

- Calibrated urdf is being saved in the wrong folder - closed

- Improve manual labelling for depth sensors (simulation not working well) - closed

- Documentation is wrong - closed
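Several of the issues above concern depth labeling from a clicked seed point, excluding image edges and ignoring outliers. One generic way such a labeler can work is seeded region growing on the depth image, sketched here under stated assumptions. It is not ATOM's implementation: the function name, the `max_step` threshold (meters between neighboring pixels) and the `border` margin (pixels) are hypothetical, the seed is given as (row, col), so a click at image coordinates (x, y) becomes (y, x), and only invalid readings (zero or NaN) are rejected, not statistical outliers.

```python
from collections import deque

import numpy as np


def grow_depth_region(depth, seed, max_step=0.02, border=5):
    """Boolean mask of pixels 4-connected to `seed` whose depth changes by less
    than `max_step` between neighbors. Pixels closer than `border` to the image
    edge and invalid depths (0 or NaN) are never included."""
    depth = np.asarray(depth, dtype=float)
    h, w = depth.shape

    def valid(r, c):
        # Bounds/border check first so indexing below is always safe.
        return (border <= r < h - border and border <= c < w - border
                and np.isfinite(depth[r, c]) and depth[r, c] > 0)

    mask = np.zeros((h, w), dtype=bool)
    r0, c0 = seed
    if not valid(r0, c0):
        return mask  # seed on the border or on an invalid reading
    mask[r0, c0] = True
    queue = deque([(r0, c0)])
    while queue:  # breadth-first flood fill
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (valid(nr, nc) and not mask[nr, nc]
                    and abs(depth[nr, nc] - depth[r, c]) < max_step):
                mask[nr, nc] = True
                queue.append((nr, nc))
    return mask
```

Comparing each pixel with its neighbor, rather than with the seed, lets the region follow a tilted pattern whose depth varies smoothly, while the jump at the pattern's boundary stops the growth.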